The ability to transmit and acquire information through communication may be our species’ greatest quality. The first step on this journey was the evolutionary development of spoken language. For that, we thank nature. The second influential step was the development of writing. While speaking and writing were effective, they could not mass produce information. The invention of the printing press, possibly the most important achievement in the history of science, changed the landscape and ushered in a scientific revolution that changed the world. However, printing still worked at a speed that humans could comprehend. The next frontier was electronics, which works at close to the speed of light. The invention of the transistor made electronics a practical reality and reaffirmed that good things do come in small packages.

(Credit: Amazon.com)

Electronics Before the Transistor

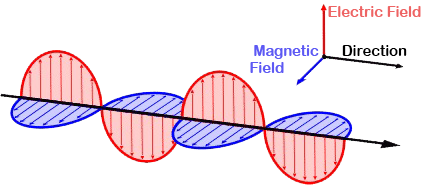

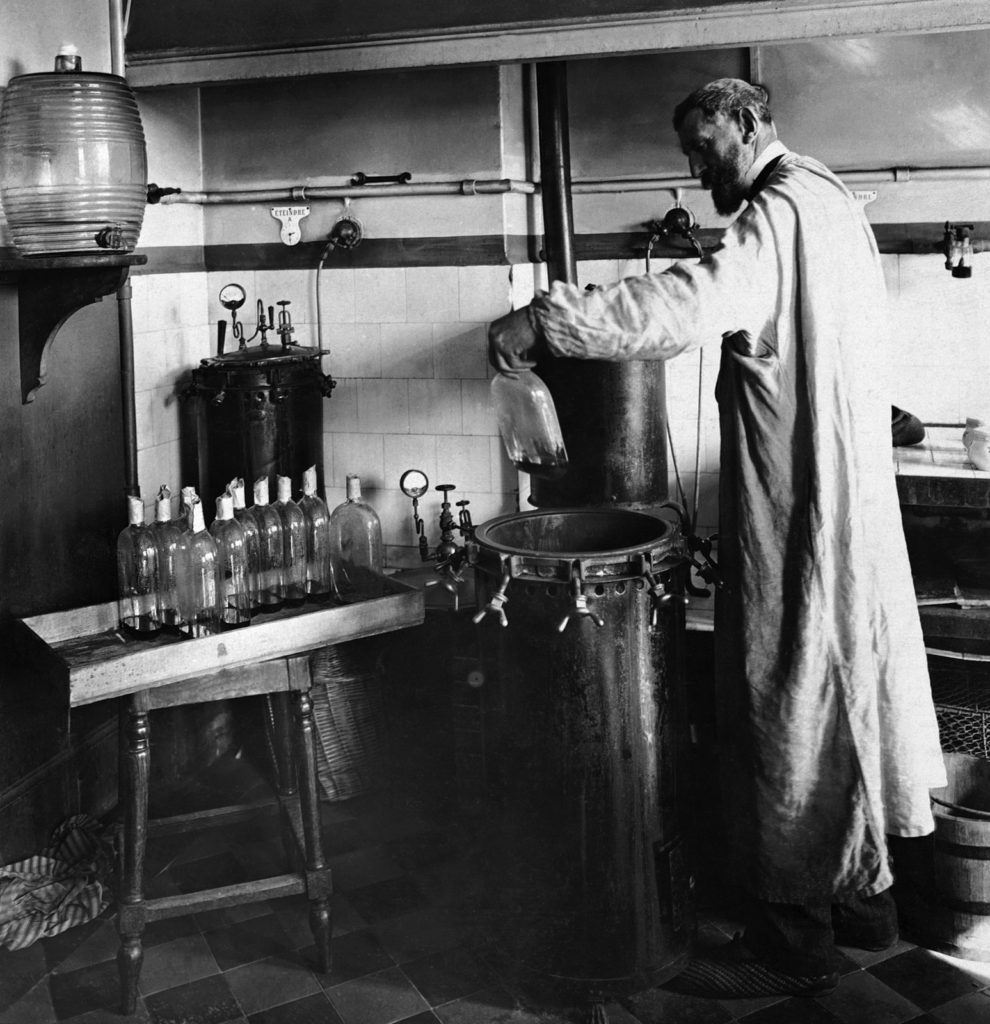

Electronic devices were in use well before the invention of the transistor. The problem with these devices was that they relied on bulky components called vacuum tubes. A vacuum tube looks like a medium-sized light bulb and, in its simplest form, consists of two electrodes, a cathode and an anode, placed at opposite ends of a sealed glass tube. The cathode is heated, causing it to release electrons, which flow through the vacuum in the tube to the anode and create a current. Eventually a third electrode, called the control grid, was added to these tubes to control the flow of electrons from the cathode to the anode.

The earliest electronic computers were built from vacuum tubes. These computers were enormous, sometimes requiring a full room to house them. They generated a tremendous amount of heat and required a huge amount of energy to run. The first large-scale, general-purpose vacuum tube computer was the ENIAC (Electronic Numerical Integrator and Computer), built for the United States Army and completed in 1945. By the end of its use in 1956 it contained roughly 18,000 tubes, weighed 27 tons, occupied 1,800 square feet and consumed 150 kilowatts of electricity. Instead of thousands of tubes, today’s much more powerful electronic devices, such as smartphones, contain billions of transistors. Imagine trying to pack a billion vacuum tubes inside your smartphone.

(Credit: Wikimedia Commons)

The Invention of the Transistor

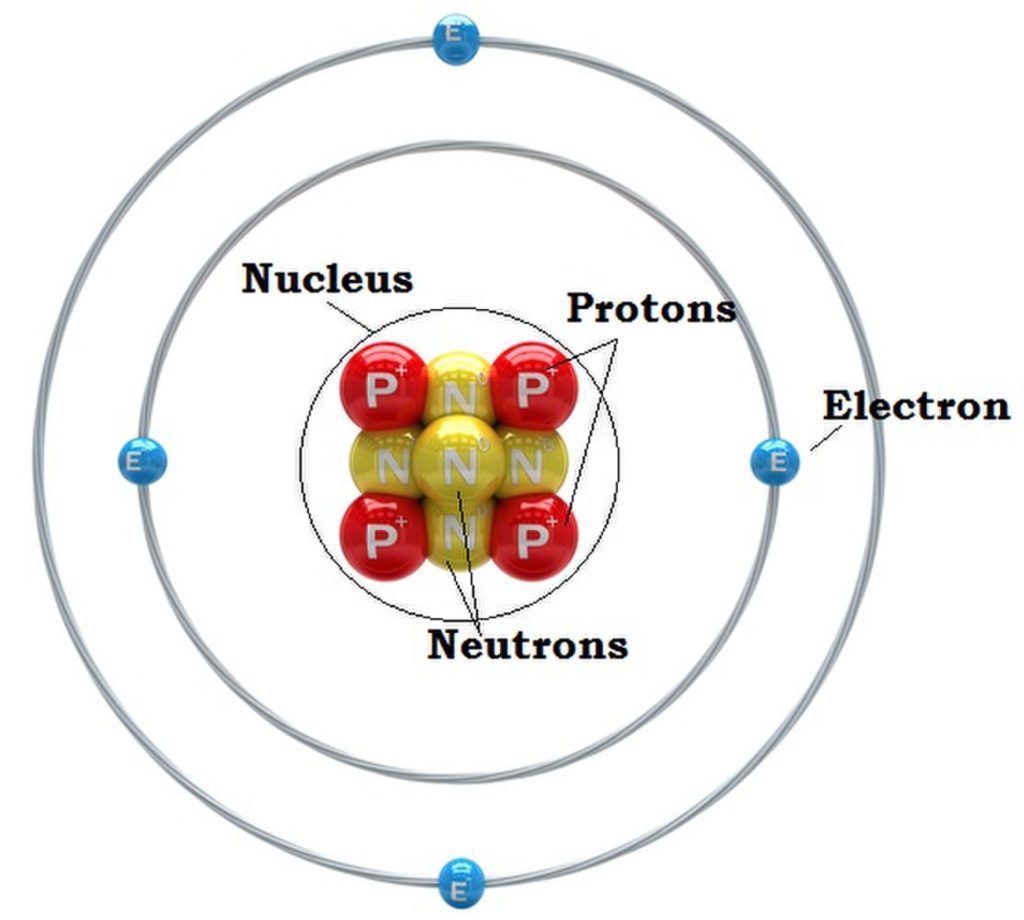

For electronics to really take off, a new device that was substantially smaller and consumed less power was needed. The transistor was invented by John Bardeen, Walter Brattain and William Shockley of Bell Labs in 1947 as a replacement for the inefficient vacuum tube. The concept of the transistor grew out of the field-effect transistor (FET) principle, which uses electric fields to control the flow of electricity. Physicist Julius Edgar Lilienfeld first filed a patent for the idea in 1925. Shockley was familiar with the FET and had been working on the idea for over a decade. Because he had been unable to produce a functioning transistor, Bell Labs teamed Shockley up with Brattain and Bardeen. Within two years they were able to construct a working transistor, which they first demonstrated at Bell Labs on December 23, 1947.

The design of the transistor has been improved over time. The first transistors were constructed from germanium, a chemical element with properties similar to those of silicon. In 1965 Gordon Moore (a co-founder of Intel) published a paper describing the regular doubling of the number of transistors on a chip, a projection he later revised into what is now known as Moore’s Law. Moore’s Law is the observation that the number of transistors on an integrated circuit doubles about every two years, and it has roughly held for four decades.
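To get a feel for how quickly that doubling adds up, here is a minimal sketch of the arithmetic in Python. The function name `projected_transistors` and the starting count of 1,000 transistors are made up for illustration; they are not figures from Moore's paper or from any real chip.

```python
def projected_transistors(initial_count, years_elapsed, doubling_period_years=2.0):
    """Project a transistor count assuming one doubling per doubling period."""
    if doubling_period_years <= 0:
        raise ValueError("doubling_period_years must be positive")
    # Each doubling period multiplies the count by 2.
    doublings = years_elapsed / doubling_period_years
    return initial_count * 2 ** doublings


# Hypothetical starting point: a chip with 1,000 transistors.
for years in (0, 10, 20, 40):
    count = round(projected_transistors(1_000, years))
    print(f"after {years:2d} years: {count:,} transistors")
```

Under these assumptions, forty years means twenty doublings, so the count grows by a factor of a little over a million. That is how a steady two-year doubling turns thousands of transistors into billions.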

The Transistor’s Impact on Technology

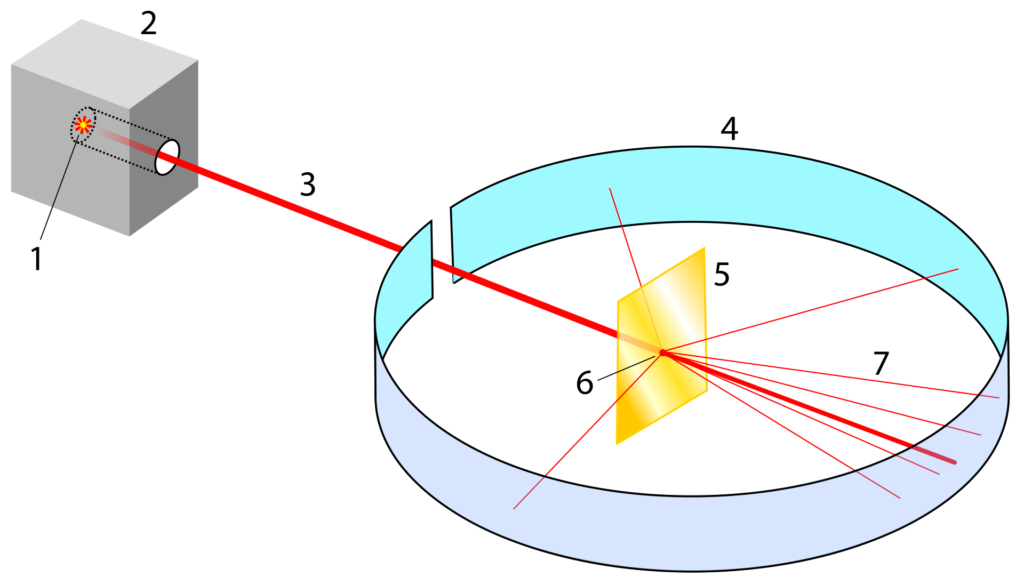

Transistors and vacuum tubes serve the same function: both amplify and control the flow of electrical signals. The difference lies in their size, heat generation, and power requirements. A transistor generates very little heat and requires little power. Importantly, it can be manufactured on a miniature scale. Transistor technology can be shrunk to the nanometer level, with today’s transistors measuring between ten and twenty nanometers in length. That tiny size allows millions of them to be deployed in microchip technology. A microchip, also called an integrated circuit, is produced from a silicon wafer and contains millions of transistors in an area the size of a grain of rice. The compactness of these microchips makes it possible to pack immense processing power into a tiny space, such as in today’s smartphones. These chips are deployed in all of our modern electronic devices. Televisions, radios, personal computers, and numerous household and industrial appliances all incorporate microchips, whose function depends on the transistor. The transistor has changed the world beyond recognition in its nearly 80-year history, and it staggers the imagination to think what the next 80 years hold.
