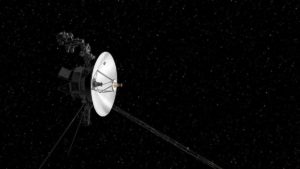

The Voyager Program is an ambitious undertaking to explore the outer Solar System and beyond. The program consists of two spacecraft, Voyager 1 and Voyager 2, launched by NASA in 1977 to study the four outer planets of the Solar System along with their moons, rings, and magnetospheres.

Background and Objectives of the Voyager Space Program

The primary objective of the Voyager space program was to carry out a comprehensive study of the outer Solar System. The mission was made possible by a bit of good luck. A rare geometric alignment of the four outer planets, which occurs roughly once every 175 years, allowed a single spacecraft to visit all four by using each planet's gravity to swing on to the next. The multi-planet tour this alignment enabled became known as the Grand Tour.

Originally, the four-planet mission was deemed too expensive and difficult, and the program was funded only to study Jupiter, Saturn, and their moons. It was known, however, that a fly-by of all four planets was possible. In preparation for the mission, over 10,000 trajectories were studied before two were selected that allowed close fly-bys of Jupiter and Saturn. Voyager 2's trajectory also preserved the option to continue on to Uranus and Neptune.

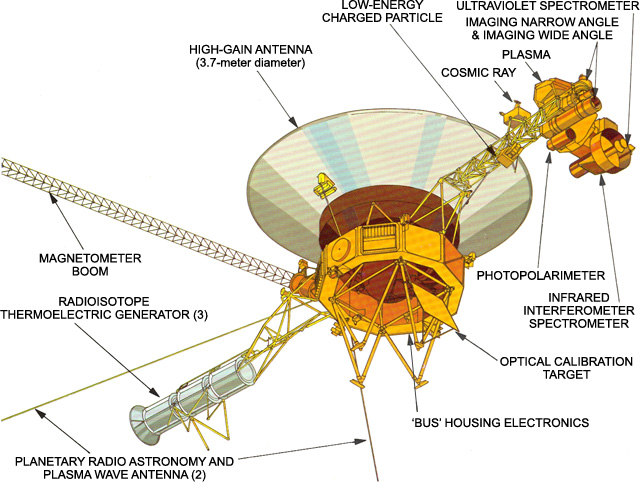

The two Voyager spacecraft are identical, each carrying a suite of instruments for a variety of experiments. These include television cameras, infrared and ultraviolet sensors, magnetometers, and plasma detectors, among others.

In addition to its scientific instruments, each Voyager spacecraft carries another notable item: the Golden Record. The Golden Record is a 12-inch gold-plated copper disk designed to be played like a phonograph record. It was intended as a kind of time capsule, meant to tell the story of humanity to any extraterrestrial civilization that might come across it. The record contains a variety of sounds and images intended to portray the diversity of life and culture on Earth, including:

- greetings in 55 languages, including both widely spoken and lesser-known languages

- music from different cultures and eras, including Bach, Beethoven, Peruvian panpipes and drums, Australian Aboriginal songs, and more

- natural sounds such as birds, wind, thunder, and ocean waves, along with human-made sounds such as laughter and a baby’s cry

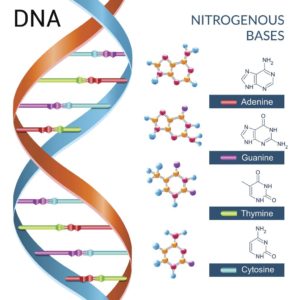

- images depicting human anatomy and DNA, plants, animals, and landscapes, the Solar System and its planets, and more

- a “Sounds of Earth” Interstellar Message, featuring a message from President Jimmy Carter and a spoken introduction by Carl Sagan

A committee chaired by the astronomer Carl Sagan selected the content for the record. Sagan described the value of the Golden Record in his own words:

“Billions of years from now our sun, then a distended red giant star, will have reduced Earth to a charred cinder. But the Voyager record will still be largely intact, in some other remote region of the Milky Way galaxy, preserving a murmur of an ancient civilization that once flourished — perhaps before moving on to greater deeds and other worlds — on the distant planet Earth.”

Carl Sagan

The Launch, Voyage, and Discoveries

Voyager 2 was launched on August 20, 1977, from Cape Canaveral, Florida, sixteen days before Voyager 1. The alignment of Jupiter, Saturn, Uranus, and Neptune in the late 1970s allowed Voyager 2 to fly by each of the four planets. Voyager 1 followed a faster trajectory that included a close pass of Titan, Saturn's largest moon. That encounter bent its path out of the plane of the planets, so it flew by only Jupiter and Saturn.

Voyager 2’s fly-bys of Jupiter and Saturn produced important discoveries, returning detailed close-up images of both planets and their moons. Much was learned about each planet, but one discovery at each stands out. At Jupiter, Voyager 2 revealed the size and structure of the Great Red Spot (a complex storm wider than Earth!), its dynamics, and its interaction with the surrounding atmosphere. At Saturn, Voyager 2 revealed the structure of the rings (close-up images showed that they are made up of countless individual particles), their dynamics, and various other features.

After the success of the Jupiter and Saturn fly-bys, NASA extended Voyager 2's mission so that it could fly by Uranus in 1986 and Neptune in 1989. Both spacecraft have since crossed the heliopause into interstellar space (Voyager 1 in 2012, Voyager 2 in 2018), and both continue to transmit data back to Earth.
