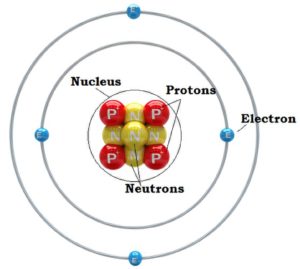

Everyone is familiar with the concept of temperature. Temperature is a way to describe how hot or cold something is. But what is it that determines how hot or cold something is?

All matter is made of atoms, and those atoms are always moving. Temperature, then, is a measure of the average kinetic energy (the energy of motion) of the particles in a substance or system. The faster the atoms move, the higher the temperature. Temperature also determines the direction of heat transfer, which always flows from objects at a higher temperature to objects at a lower temperature.
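To make the link between particle motion and temperature concrete, here is a minimal sketch that assumes the simplest textbook model, a monatomic ideal gas, where the average translational kinetic energy per particle is (3/2) k T. The function name `mean_kinetic_energy` is hypothetical and chosen only for illustration; temperature is measured from absolute zero, in kelvins.

```python
# Boltzmann constant in joules per kelvin (exact value in the SI since 2019).
BOLTZMANN_J_PER_K = 1.380649e-23


def mean_kinetic_energy(temp_kelvin: float) -> float:
    """Average translational kinetic energy (in joules) of one particle.

    Assumes a monatomic ideal gas, where the energy is (3/2) * k * T.
    Real substances are more complicated; this is only an illustration.
    """
    if temp_kelvin < 0:
        raise ValueError("temperature in kelvins cannot be negative")
    return 1.5 * BOLTZMANN_J_PER_K * temp_kelvin


if __name__ == "__main__":
    # Doubling the temperature doubles the average kinetic energy.
    for temp in (150.0, 300.0, 600.0):
        print(f"{temp:6.1f} K -> {mean_kinetic_energy(temp):.3e} J per particle")
```

In this model the energy scales in direct proportion to temperature, which is why faster-moving atoms mean a hotter substance.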

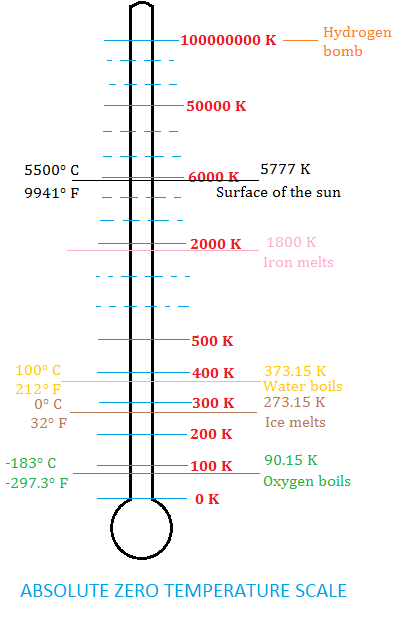

Absolute zero is the lowest temperature theoretically possible. It corresponds to a bone-chilling -459.67 degrees on the Fahrenheit scale and -273.15 degrees on the Celsius scale. In the classical picture, this temperature marks the complete absence of thermal energy, as the particles of a substance have no kinetic energy.
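The two figures quoted above are the same temperature expressed on different scales. As a quick check, the following sketch applies the standard conversion formulas; the function names are hypothetical and chosen only for illustration.

```python
# Absolute zero expressed in degrees Celsius.
ABSOLUTE_ZERO_C = -273.15


def celsius_to_fahrenheit(temp_c: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return temp_c * 9 / 5 + 32


def celsius_to_kelvin(temp_c: float) -> float:
    """Convert degrees Celsius to kelvins (degrees above absolute zero)."""
    return temp_c - ABSOLUTE_ZERO_C


if __name__ == "__main__":
    print(f"Fahrenheit: {celsius_to_fahrenheit(ABSOLUTE_ZERO_C):.2f}")
    print(f"Kelvin:     {celsius_to_kelvin(ABSOLUTE_ZERO_C):.2f}")
```

Running it should reproduce the Fahrenheit value quoted above, and it shows that the same point is zero when counted in kelvins.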

The History of Absolute Zero

The roots of the idea of absolute zero can be traced back to the 17th century, when scientists began to explore the behavior of gases. In 1662, Robert Boyle formulated Boyle’s Law, which states that the volume of a gas is inversely proportional to its pressure at a constant temperature. This law laid the foundation for the study of gases and eventually led to the concept of absolute zero.

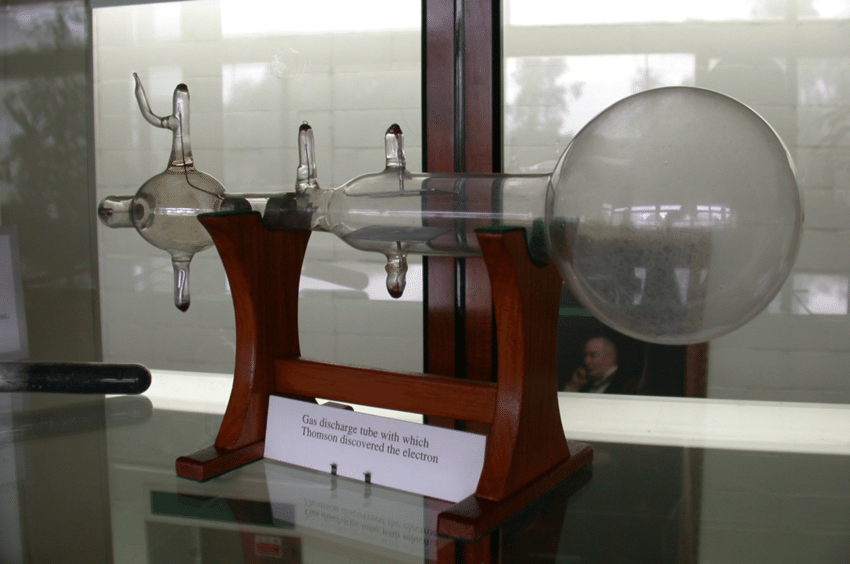

Over the next 200 years, additional discoveries brought scientists closer and closer to the concept of an absolute zero temperature point. Then, in 1848, the distinguished British scientist William Thomson (later Lord Kelvin) published a paper titled "On an Absolute Thermometric Scale", in which he made the case for a new temperature scale whose lower limit would be absolute zero. At the time, temperature was measured on various scales, such as Celsius and Fahrenheit. However, these scales were based on arbitrary reference points. Thomson recognized the need for a temperature scale that would provide a universal standard and be based on fundamental physical principles. With such a scale, scientists could take temperature measurements without needing negative numbers.

Thomson’s key insight was to base his new scale on the behavior of an ideal gas. According to Gay-Lussac’s Law, the pressure of an ideal gas varies linearly with its temperature when the volume is held constant, and according to Charles’s Law, its volume varies linearly with its temperature when the pressure is held constant. He realized that if a gas could be cooled to the temperature at which its volume would shrink to zero, that temperature would represent the absolute zero of temperature. Thomson correctly calculated its value and used the Celsius degree as the scale’s unit increment.
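The extrapolation idea can be sketched numerically. The example below fits a straight line to gas volumes at several Celsius temperatures and finds where that line would reach zero volume. The data points are idealized values generated from the ideal-gas relation purely for illustration; they are not historical measurements, and the helper `linear_fit` is a hypothetical name.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Illustrative (not historical) data: relative volume of an ideal gas at
# constant pressure, normalized so that the volume is 1.0 at 0 degrees Celsius.
temps_c = [0.0, 25.0, 50.0, 75.0, 100.0]
volumes = [1.0 + t / 273.15 for t in temps_c]

slope, intercept = linear_fit(temps_c, volumes)

# The fitted line crosses zero volume where slope * t + intercept = 0.
zero_volume_temp_c = -intercept / slope

if __name__ == "__main__":
    print(f"Extrapolated zero-volume temperature: {zero_volume_temp_c:.2f} degrees Celsius")
```

Because the input data follow the ideal relation exactly, the extrapolated temperature lands at the Celsius value of absolute zero. Real gases liquefy long before reaching zero volume, which is why the argument relies on an ideal gas.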

Absolute zero is the temperature at which all atoms would stop moving and their kinetic energy would equal zero. This temperature has never been achieved in the laboratory, but experiments have come close. Sophisticated technology that uses laser beams to trap clouds of atoms, held together by magnetic fields generated by coils, has cooled elements such as helium to within fractions of a degree of absolute zero. One record for the coldest temperature was set by a team of researchers at Stanford University in 2015. They used laser beams to slow rubidium atoms, cooling them to an incredible 50 trillionths of a degree, or 0.00000000005 degrees Celsius, above absolute zero! This is extremely impressive, since theory suggests that we will never be able to reach absolute zero itself.

Thomson’s temperature scale was later named the Kelvin Scale in his honor, and kelvin is the International System of Units (SI) base unit of temperature.

Practical Uses of Absolute Zero

The concept of absolute zero is relevant to many modern technologies, such as cryogenics and quantum computing. Below is a summary of its applications:

- Cryogenics – cryogenics is the study of very low temperatures. Its technologies are used in various industries such as medical science, where they assist in the preservation and storage of biological materials.

- Superconductivity – superconductivity is the phenomenon where certain materials can conduct electric current with zero electrical resistance. Superconductivity is needed in several fields including medical imaging (MRI) and particle accelerator technologies.

- Quantum Computing – at very low temperatures quantum mechanical effects become more pronounced. In order to create and manipulate qubits, the basic unit of quantum information, quantum computing systems require extremely low temperatures.

- Space Exploration – extremely low temperatures are encountered in deep space. Understanding the properties of materials at these temperatures is crucial for designing spacecraft components.

As you can see, absolute zero holds profound implications for various fields of study and cutting-edge technology.
