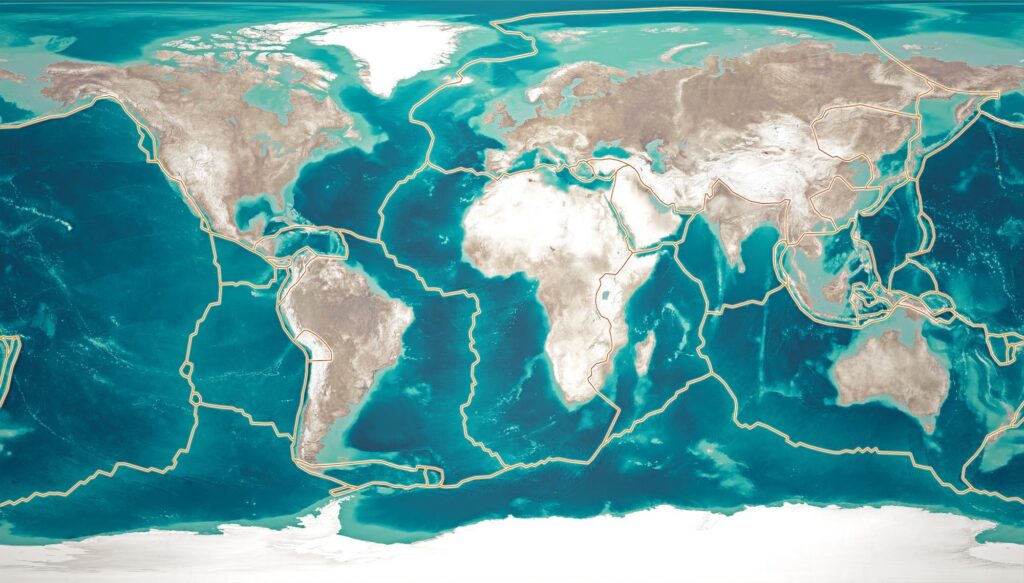

Plate tectonics is a scientific theory that explains the movement of the Earth’s surface and many of its most prominent geological features. It is responsible for everything from the deepest trench in the ocean to the tallest mountain on land (and in the ocean, as we’ll soon see). The uppermost layer of the Earth is called the lithosphere and is composed of large rocky plates that sit on top of a hotter, more ductile layer of rock called the asthenosphere. Convection currents in the mantle beneath the plates cause them to glide at a rate of a few centimeters per year. While this may not seem like much, given enough time the process has formed many of the geologic features of our planet, including the Himalayas and the separation of the South American and African continents.

(Credit: National Geographic)
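To get a feel for how a rate of a few centimeters per year adds up, here is a minimal sketch of the arithmetic. The function name `drift_distance_km`, the sample rates, and the 100-million-year time span are illustrative assumptions rather than measured values, and real plate speeds are not constant over time.

```python
def drift_distance_km(rate_cm_per_year: float, years: float) -> float:
    """Distance covered at a constant drift rate, in kilometers.

    Assumes a steady rate, which is a simplification for illustration.
    """
    cm_per_km = 100_000  # 100 cm per meter * 1,000 m per kilometer
    return rate_cm_per_year * years / cm_per_km


if __name__ == "__main__":
    years = 100_000_000  # hypothetical time span: 100 million years
    for rate in (1.0, 2.5, 5.0):  # hypothetical rates in cm per year
        distance = drift_distance_km(rate, years)
        print(f"{rate} cm/yr over {years:,} years = {distance:,.0f} km")
```

At these assumed rates the result is on the order of thousands of kilometers, which is the scale of an ocean basin.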

History of Plate Tectonics

The theory of plate tectonics is a relatively new idea, although fragments of it can be found in earlier times. In 1596 the cartographer Abraham Ortelius observed that the coastlines of Africa and South America could be fitted together like pieces of a jigsaw puzzle. He speculated that the continents may once have been joined but had since been ripped apart by earthquakes or a flood. Creationists have seized on this idea to “prove” the existence of Noah’s Flood. It does provide an arresting image, as Richard Dawkins points out, of South America and Africa racing away from each other at the speed of a tidal wave. In the 1850s geologists noticed a correlation between the rock types and fossils found in coal deposits on the two continents. The year 1872 saw the mapping of the Mid-Atlantic Ridge.

In the early 20th century a few versions of continental drift began making an appearance, but one stood out for its influence on the development of the Earth sciences. In 1912 Alfred Wegener published two papers in which he proposed his controversial theory of continental drift. He suggested that in the past all landmasses were joined together in a supercontinent that he called Pangaea (meaning “all lands”). However, he did not have a geological mechanism for how the landmasses drifted apart, and the idea was met with much skepticism at first. Over a period of decades Wegener continued to amass evidence for his theory and to promote his model of continental drift. He gathered evidence from paleoclimatology to give the idea a further boost. He noticed that glaciations in the distant past had occurred simultaneously on continents that are not connected to each other and that lie outside the polar regions. Unfortunately, while in Greenland on an expedition to collect some of this data, Wegener died in 1930. Many of his specific details have turned out to be incorrect, but his overall concept that the continents are not static and do move over time has been proven correct.

During the middle of the 20th century more evidence began to support the idea that the continents did move. Huge mountain ranges were being mapped on the ocean floor. Both supporters and opponents of continental drift had assumed that the sea floor was ancient and would be covered with thick layers of sediment from the continents. When samples were finally obtained, they showed that all of this was wrong. There was hardly any sediment, and the rocks were young, with the youngest rocks found near the ocean ridges. In 1960 the American geologist Harry Hess pieced all of this evidence together in his theory of sea-floor spreading. The ocean ridges were produced by magma rising from the asthenosphere. As it rose to the surface the magma cooled, forming the young rocks and spreading the ocean floor in a conveyor-belt-like motion through the slow process of convection. While some parts of the Earth were creating new oceanic crust and spreading the continents apart, other parts were doing just the opposite. Along the western edge of the Pacific, for example, thin oceanic crust was being forced beneath thicker crust and driven down into the mantle below. This explains the presence and high frequency of earthquakes and volcanoes in places like Japan.

By the beginning of 1967 the evidence for continental drift and sea-floor spreading was quite strong and ready to be assembled into a complete package. That year Dan McKenzie and his colleague Robert Parker published a paper in Nature introducing the term plate tectonics for the overall package of ideas that describe how the Earth’s plates move and the geological features they create. To this day many details are still being fleshed out, but this moment marked a revolution in the Earth sciences. It is relevant not only in geology but also in its synthesis with the evolution of life on Earth.
