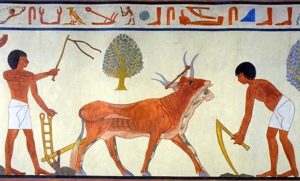

The human domestication of plants was the single most influential event in human history. It marks the transition from a nomadic lifestyle to a settled and, eventually, urban one. Its impact is summed up in the agricultural revolution, which brought a tremendous increase in food production. That increase supported ever larger populations and, eventually, the birth of city-states, which marked the dawn of civilization. Together with the domestication of animals, which took place around the same time, the domestication of plants profoundly changed the course of humanity.

When and Where Did the Domestication of Plants Happen?

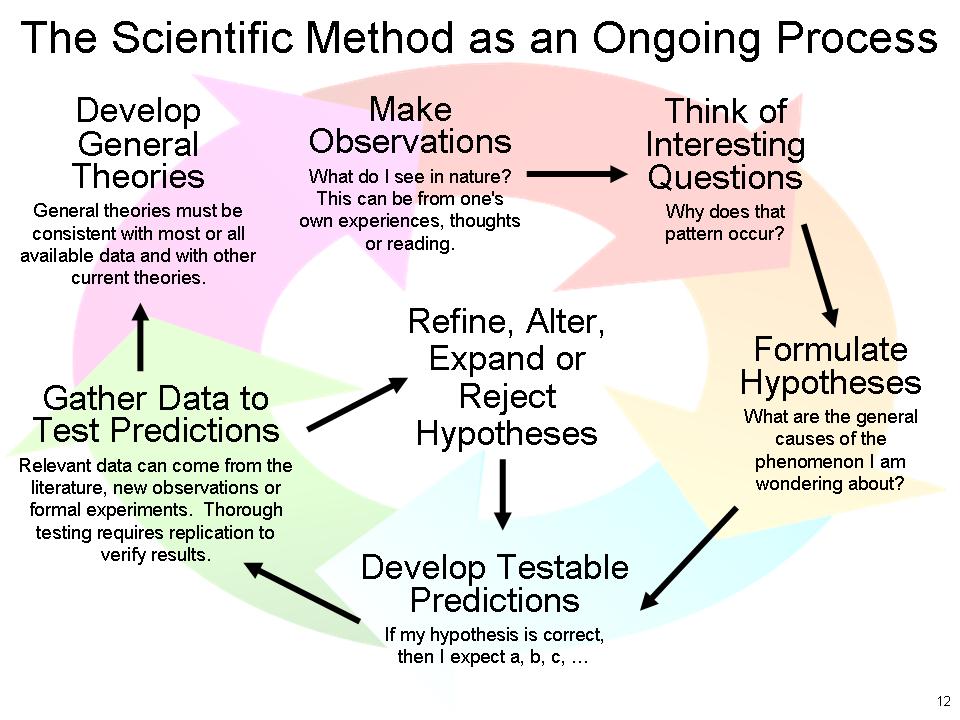

Plant domestication is the alteration of wild plant species into crop plants by humans, a process known as artificial selection. The original techniques were probably stumbled upon by accident, and the path to agriculture was certainly slow and gradual. The earliest domestication of plants, and the transition to agriculture that followed, is better thought of as an evolutionary process than as an intentional discovery.

We have only fragmentary evidence of its beginnings, since it began thousands of years before writing was invented. The earliest evidence suggests that plant domestication began with cereal crops in the Fertile Crescent of the Middle East, the region that includes the land between the Tigris and Euphrates rivers. Other areas of the globe later domesticated other crops independently: rice was domesticated in China and maize (corn) in the Americas within the following few thousand years. Over the same long period, herbs such as coriander, vegetables and legumes such as sweet potatoes and lentils, and fruits such as figs and plums were domesticated as well.

How Did It All Happen?

Plant domestication was complex, slow, and gradual. Independent invention was rare: the most recent evidence suggests that agriculture arose independently in no more than ten places, although the exact number is still debated because the evidence is incomplete and inconclusive. In most other regions it arrived through cultural diffusion.

The road to the domestication of plants was long and winding, full of cliffs and dead ends. It involved centuries of trial and error and depended on local climate, geography, and the plant species available. However, a few factors seem to have been especially important in its evolution.

- The decline of wild animal populations – By around 13000 BCE humans had become extremely proficient hunters, and large game was beginning to thin out. This made hunting less rewarding and alternative food sources more attractive.

- An increasing abundance of wild edible plants due to a change in climate – Around 13000 BCE the Earth began to warm, and plant life became more plentiful. This made gathering plants more rewarding and gave people living where these plants were most abundant more opportunities to learn by trial and error.

- The cumulative development of food production technologies – In some areas edible plants were so abundant that people could abandon a nomadic way of life and establish permanent settlements. Settling down gave them the opportunity to develop food storage, tools, and new production methods.

- Population growth led to new food production strategies – The abundance of wild plants led to a surge in population, which in turn demanded new ways to feed people. This created what is known as an autocatalytic process: each gain in food production supported more people, and the larger population then demanded still more food.

Completing the Process and Establishing a New Way of Life

Once humans began moving around less, they were better placed to notice changes in plant life. Some seeds and fruits were inevitably dropped on the way back to camp, and people soon noticed new plants growing along these well-worn trails. Refuse heaps of discarded food likewise sprouted new plants in the following seasons, as did the spots where people had spat out seeds.

Eventually people made the connection between planting seeds and growing crops. Over time this process was refined and improved, and new species of plants were tried. Some species were much easier to domesticate than others, and these species were not distributed evenly across the world, which helps explain why some societies invented agriculture and others did not. This new way of controlling nature to grow food soon allowed societies to reach populations so large that only agriculture could support them, and life began to revolve around farming. These larger, more populous societies were able to conquer or assimilate their neighbors, spreading plant domestication through cultural diffusion. The age of the hunter-gatherer was ending, and the rise of civilization had begun!
